Microsoft's PHI 3.5: A New Era of Multilingual and High-Quality Small Language Models

September 16, 2024

Gizem Argunşah

In the rapidly evolving artificial intelligence landscape, Microsoft presents PHI 3.5 as a model that pushes the limits of what small language models can do. As businesses and developers look to apply AI in increasingly complex and multilingual contexts, PHI 3.5 aims to deliver the capabilities they need.

PHI 3.5 is the latest generation in Microsoft's PHI series and a significant step forward for these language models. It builds on the foundation of earlier PHI models, which were widely praised for the quality of their natural language understanding and generation.

Capabilities

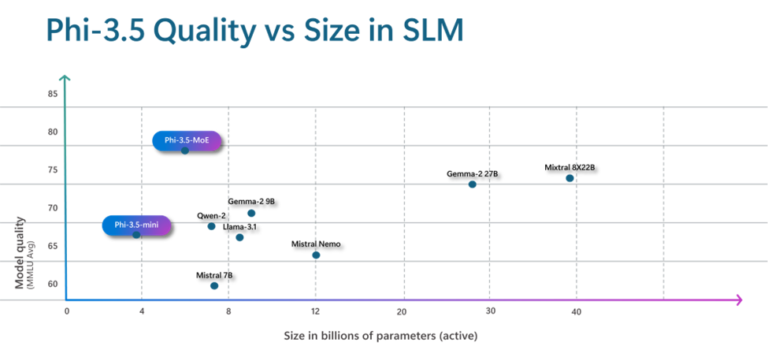

PHI 3.5 continues these enhancements, focusing on fine-tuning the model to perform well in both multilingual contexts and high-quality specialized language modeling (SLM). For more detail on SLM, see our blog post on skymod.tech.

Key Features of PHI 3.5

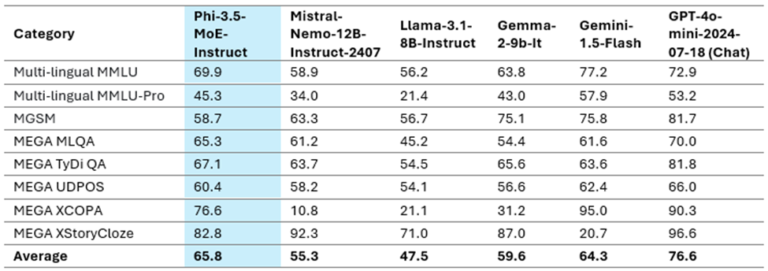

Another important feature of PHI 3.5 is multilingual support. As businesses become more globalized, demand for cross-lingual natural language processing has grown sharply, so PHI 3.5 has been trained on a diverse set of languages. It understands, generates, and translates text across a wide range of linguistic contexts, which makes it well suited to enterprises operating in multicultural environments.

Multi-lingual Capability

Specialized language modeling (SLM) is another capability of PHI 3.5. The importance of SLM in industries that require context-aware language processing should not be underestimated. PHI 3.5 makes notable progress in understanding and generating specialized terminology and domain-specific content for fields such as healthcare, finance, and legal services.

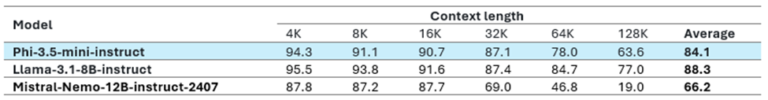

Long Context

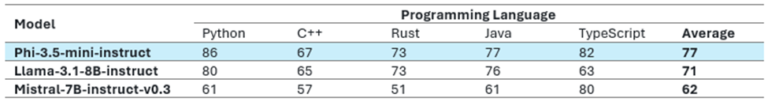

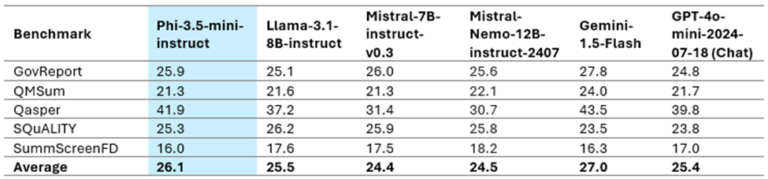

Phi-3.5-mini, with support for a 128K context length, excels in tasks like summarizing long documents or meeting transcripts, long document-based QA, and information retrieval. Phi-3.5 performs better than the Gemma-2 family, which supports only an 8K context length. Additionally, Phi-3.5-mini is highly competitive with much larger open-weight models such as Llama-3.1-8B-instruct, Mistral-7B-instruct-v0.3, and Mistral-Nemo-12B-instruct-2407. The tables list results on various long-context benchmarks.
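To make the practical meaning of a 128K window concrete, here is a minimal sketch of a pre-flight check that estimates whether a long transcript fits in one request and splits it if it does not. It rests on assumptions that are not from the article: "128K" is read as 131,072 tokens, token counts are approximated with a rough four-characters-per-token heuristic for English text, and the helper names are hypothetical. A real pipeline would count tokens with the model's own tokenizer.

```python
# Assumption: "128K" is read as 128 * 1024 tokens.
CONTEXT_TOKENS = 128 * 1024
# Assumption: crude heuristic for English text, not the model's tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate using ceiling division on character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_to_fit(text: str, reserved_tokens: int = 2048,
                 context_tokens: int = CONTEXT_TOKENS) -> list[str]:
    """Split text into pieces that each fit the window.

    reserved_tokens leaves room for the instruction and the model's answer.
    """
    budget_chars = (context_tokens - reserved_tokens) * CHARS_PER_TOKEN
    if budget_chars <= 0:
        raise ValueError("reserved_tokens must be smaller than context_tokens")
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]


if __name__ == "__main__":
    transcript = "word " * 200_000  # hypothetical long meeting transcript
    print(estimate_tokens(transcript), "estimated tokens")
    print(len(split_to_fit(transcript)), "chunk(s) needed")
```

Under the same heuristic, an 8K window would force the same transcript into many more chunks, which is the practical difference the comparison above points to.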

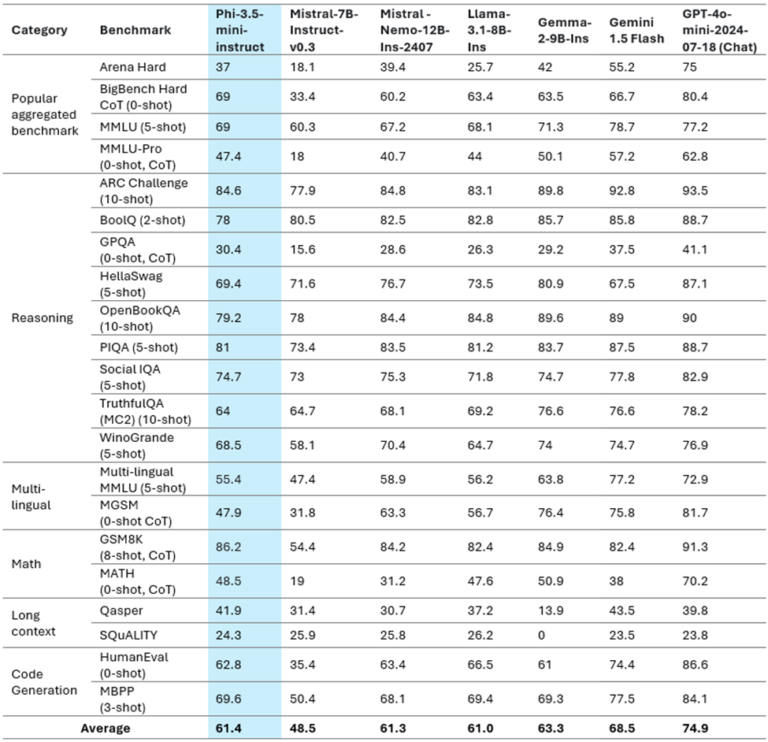

PHI 3.5 sets a high bar on industry benchmarks. The model was evaluated against many benchmarks used by the research community, covering natural language understanding, translation accuracy, and domain-specific tasks. In these evaluations it mostly outperformed earlier PHI models and many other leading models.

Phi-3.5-vision with Multi-frame Input

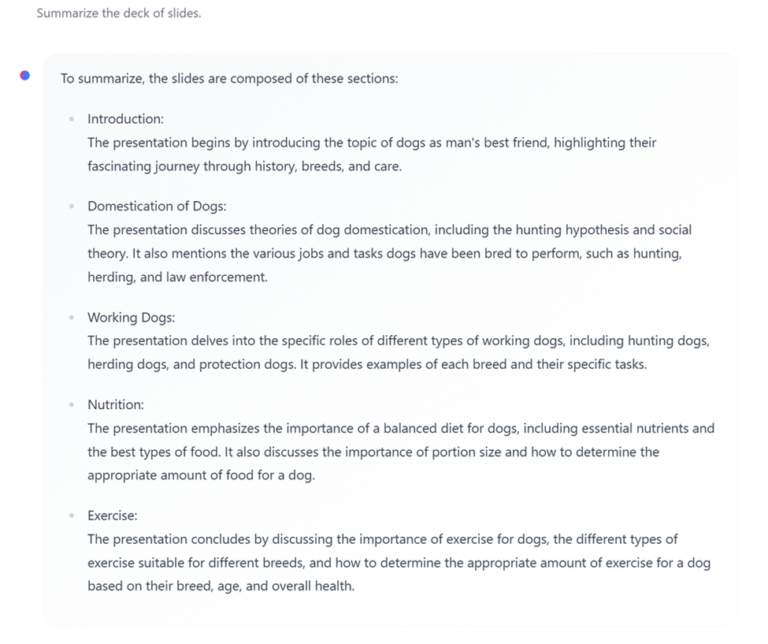

Phi-3.5-vision introduces cutting-edge capabilities for multi-frame image understanding and reasoning, developed based on invaluable customer feedback. This innovation empowers detailed image comparison, multi-image summarization/storytelling, and video summarization, offering a wide array of applications across various scenarios.

For example, by analyzing multiple images of different dog breeds, Phi-3.5-vision could generate detailed comparisons of their physical characteristics and temperaments, enriching a section on “Dog Breeds.”

By processing images depicting various dog activities (playing, working, training), the model could create compelling narratives highlighting the versatility and intelligence of dogs.
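As a rough illustration of what multi-frame input looks like on the prompt side, the sketch below builds a single user message that refers to several images at once. It assumes the numbered `<|image_N|>` placeholder convention described in the public model card for Phi-3.5-vision; that convention is not stated in this article and should be checked against the current documentation. The function name is hypothetical, and loading images or calling the model is left out.

```python
def build_multiframe_prompt(num_images: int, instruction: str) -> list[dict]:
    """Build one chat message that references num_images frames.

    Assumption: images are referenced by numbered placeholders of the
    form <|image_1|>, <|image_2|>, ... placed before the instruction.
    """
    if num_images < 1:
        raise ValueError("at least one image is required")
    placeholders = "".join(f"<|image_{i}|>\n" for i in range(1, num_images + 1))
    return [{"role": "user", "content": placeholders + instruction}]


if __name__ == "__main__":
    messages = build_multiframe_prompt(3, "Compare the dog breeds in these photos.")
    print(messages[0]["content"])
```

The images themselves would be passed to the model's processor in the same order as the placeholders.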

Safety

The Phi-3 family of models was developed in accordance with the Microsoft Responsible AI Standard, which is a company-wide set of requirements based on the following six principles: accountability, transparency, fairness, reliability and safety, privacy and security, and inclusiveness. As with the previous Phi-3 models, a multi-faceted safety evaluation and safety post-training approach was adopted, with additional measures taken to account for the multilingual capabilities of this release. Microsoft's approach to safety training and evaluation, including testing across multiple languages and risk categories, is outlined in the Phi-3 Safety Post-Training Paper. While the Phi-3 models benefit from this approach, developers should apply responsible AI best practices, including mapping, measuring, and mitigating risks associated with their specific use case and cultural and linguistic context.

Availability and Accessibility

Microsoft has made PHI 3.5 available through its Azure AI services to businesses and developers worldwide. The model can be integrated into a range of applications, from chatbots and virtual assistants to translation services and content generation tools. Businesses can use it without needing to know how the model was trained or how it works under the hood.

For those interested in diving deeper into PHI 3.5, Microsoft has shared detailed documentation and resources, including extensive guides on integrating the model into various use cases. Developers can also access PHI 3.5 on the Azure platform to experiment with the model and deploy it in their applications.

PHI 3.5 is built on Microsoft's AI architecture and draws on recent advances in deep learning and transformer-based models. It was trained on very large, specially curated datasets chosen to cover as many languages and specialized content needs as possible. This training enables PHI 3.5 to handle general language tasks while also delivering high accuracy in more niche, specialized applications.

Moreover, with advanced fine-tuning techniques, PHI 3.5 can be adapted to new languages and domains with minimal retraining. This flexibility matters for businesses that must respond to rapidly changing market needs and language diversity.

PHI 3.5 has many real-world applications. In healthcare, for example, the model can be used to process medical records in various languages, helping providers deliver fast and accurate care to patients from diverse linguistic backgrounds. In the financial sector, PHI 3.5 could help generate precise reports and market analyses across languages.

Furthermore, the multilingual capability of PHI 3.5 is a good fit for international customer service operations. By understanding and responding to customer queries in their native language, businesses can significantly boost customer satisfaction and loyalty.

Phi-3.5-mini stands out in the LLM landscape with its impressive combination of size, context length, and multilingual capabilities. Despite its modest 3.8B parameters, it boasts a substantial 128K context length and supports multiple languages. This unique balance between broad language support and focused English performance makes Phi-3.5-mini a significant milestone in efficient, multilingual models.

While its small size may result in a higher density of English knowledge compared to other languages, Phi-3.5-mini can be effectively used for multilingual, knowledge-intensive tasks through a Retrieval-Augmented Generation (RAG) setup. By leveraging external data sources, RAG can significantly enhance the model’s performance across different languages, mitigating the limitations imposed by its compact architecture.
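The RAG idea described above can be sketched in a few lines: retrieve the passages most relevant to a question, then place them in the prompt so the model answers from supplied text instead of from its own compressed knowledge. This is only a minimal illustration under stated assumptions. The function names are hypothetical, the retriever is a simple bag-of-words cosine similarity, and the final call to the model is omitted. Lexical matching like this only works when the question and the passages share a language, so a multilingual deployment would more plausibly use a multilingual embedding model for retrieval.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into word tokens (Unicode-aware)."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(passages,
                    key=lambda p: cosine(q, Counter(tokenize(p))),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Assemble a grounded prompt; sending it to the model is left out."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


if __name__ == "__main__":
    docs = [
        "Istanbul is the largest city in Türkiye.",
        "Phi-3.5-mini has 3.8B parameters.",
        "Bananas are yellow.",
    ]
    print(build_prompt("How many parameters does Phi-3.5-mini have?", docs, k=1))
```

Because the knowledge lives in the retrieved passages, the same small model can answer questions about material it was never trained on, in whichever supported language the passages are written.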

Phi-3.5-MoE, with its 16 small experts, offers high-quality performance and reduced latency, and supports a 128K context length and multiple languages with strong safety measures. It outperforms larger models and can be customized for various applications through fine-tuning, all while maintaining efficiency with 6.6B active parameters.
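The gap between total and active parameters comes from how a mixture-of-experts layer works: a router scores all experts for each token, but only the few highest-scoring experts actually run. The toy sketch below shows that routing step with 16 experts. Choosing the top 2 experts per token is an assumption made for illustration and is not stated in this article, and the "experts" here are trivial stand-in functions, not neural network layers.

```python
import math
from typing import Callable, Sequence


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits: Sequence[float], top_k: int = 2) -> list[tuple[int, float]]:
    """Pick the top_k experts and normalise their weights among themselves."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i],
                 reverse=True)[:top_k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x: float,
                experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float],
                top_k: int = 2) -> float:
    """Weighted sum of the selected experts; the other experts never run."""
    return sum(w * experts[i](x) for i, w in route(router_logits, top_k))


if __name__ == "__main__":
    # 16 stand-in experts: expert i multiplies its input by i.
    experts = [lambda x, scale=i: x * scale for i in range(16)]
    logits = [0.0] * 16
    logits[3] = 5.0
    logits[7] = 5.0
    print(route(logits))
    print(moe_forward(2.0, experts, logits))
```

Only the selected experts contribute compute for a given token, which is why a model can hold many experts in total while keeping per-token cost, and therefore latency, closer to that of a much smaller dense model.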

Phi-3.5-vision introduces advancements in multi-frame image understanding and reasoning, enhancing single-image benchmark performance.

The Phi-3.5 model family provides cost-effective, high-capability options for the open-source community and Azure customers, pushing the boundaries of small language models and generative AI.

For a more detailed discussion of PHI 3.5, see the original Azure AI blog post from Microsoft.