Rebirth of the game changer: SAM 2 (Segment Anything Model 2) by Meta AI

September 16, 2024

Gizem Argunşah

Strong Real-Time Object Tracking for Versatile Applications

This AI model tracks objects smoothly across video frames in real time, opening the door to a wide range of video editing and augmented reality applications. Building on the success of the original Segment Anything Model (SAM), SAM 2 combines strong performance with efficiency, which also makes it a practical tool for annotating the visual data used to train computer vision systems. It enables new ways to select and interact with objects in live or real-time video.

Key Features of SAM 2

Object Selection and Adjustment: Extends SAM's prompt-based object segmentation so that selected objects can be tracked across video frames.

Robust Segmentation of Unfamiliar Videos: Zero-shot generalization lets it segment objects in images and videos from unseen domains, making it useful for a wide variety of real-world applications.

Real-Time Interactivity: Streaming memory architecture processes video frames one by one, enabling real-time interactivity.

Performance Improvements

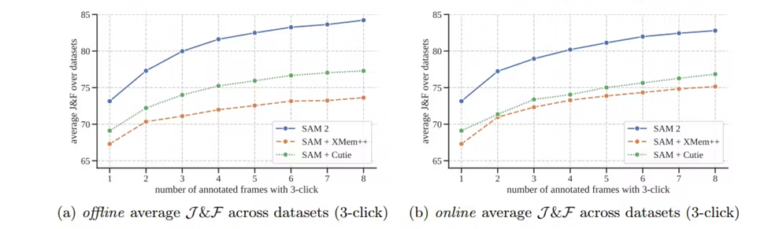

SAM 2 sets a new benchmark in both video and image segmentation. In video segmentation, it achieves higher accuracy while requiring three times fewer user interactions, and it speeds up video annotation by about 8x. In image segmentation, it is more accurate than its predecessor, SAM, and six times faster.

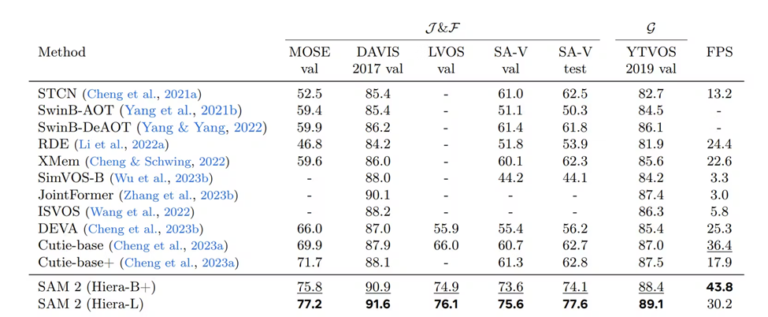

SAM 2 (Hiera-B+ and Hiera-L) outperforms existing state-of-the-art (SOTA) models on various video object segmentation benchmarks, including MOSE, DAVIS, LVOS, SA-V, and YTVOS, while also maintaining a competitive inference speed.

Meta AI points out that the influence of SAM 2 goes beyond simple video segmentation: “We believe our data, model and insights will be a huge milestone for video segmentation and related perception tasks.”

Architectural Innovations in SAM 2

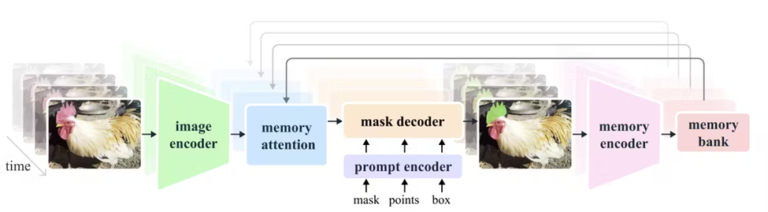

Frame Embeddings and Memory Conditioning: Instead of treating each frame in isolation, SAM 2 conditions frame embeddings on the outputs of previous frames and prompted frames, which lets it take an object's history and motion into account.

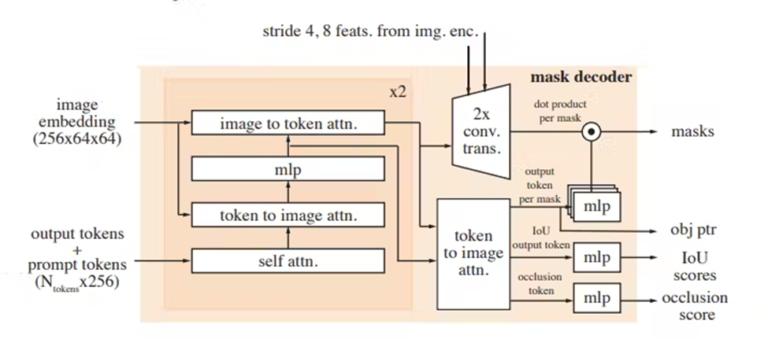

The architecture of SAM 2, illustrating the flow of image and prompt embeddings through the image encoder, mask decoder, and various attention mechanisms to generate object masks and associated confidence scores.

Memory Encoder and Memory Bank: The memory encoder creates a memory from the current frame's prediction, and the memory bank stores these memories in a FIFO (first-in, first-out) queue. Short-term temporal position information is embedded into the memories of recent frames so that the model can capture short-term object motion.
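To make the FIFO behavior concrete, here is a minimal Python sketch of a memory bank, assuming each memory is just a list of floats. The class `MemoryBank`, its capacities (`max_recent`, `max_prompted`), and the split into separate queues for recent and prompted frames are illustrative assumptions, not SAM 2's actual code; the frame offset returned by `retrieve` is a simple stand-in for the temporal position information described above.

```python
from collections import deque
from typing import List, Optional, Tuple

Memory = List[float]  # hypothetical placeholder for an encoded frame memory


class MemoryBank:
    """FIFO memory bank sketch with separate queues for recent and prompted frames."""

    def __init__(self, max_recent: int = 6, max_prompted: int = 2) -> None:
        # deque(maxlen=...) evicts the oldest entry when full: first in, first out.
        self.recent: deque = deque(maxlen=max_recent)
        self.prompted: deque = deque(maxlen=max_prompted)

    def add(self, frame_idx: int, memory: Memory, prompted: bool = False) -> None:
        queue = self.prompted if prompted else self.recent
        queue.append((frame_idx, memory))

    def retrieve(self, current_idx: int) -> List[Tuple[Optional[int], Memory]]:
        """Return (temporal_offset, memory) pairs for conditioning frame `current_idx`.

        Recent memories carry their distance to the current frame (a stand-in
        for a temporal position encoding); prompted memories carry None.
        """
        tagged: List[Tuple[Optional[int], Memory]] = [
            (current_idx - idx, mem) for idx, mem in self.recent
        ]
        tagged += [(None, mem) for _, mem in self.prompted]
        return tagged
```

Using `deque(maxlen=...)` means the oldest memory is dropped automatically once a queue is full, so memory use stays bounded no matter how long the video runs.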

Image Encoder: Processes video frames in order and produces multiscale feature embeddings for each frame.

Memory Attention Mechanism: A stack of transformer blocks conditions the current frame's features on past frame features, predictions, and any new prompts, combining the object's history with the current context.
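The toy sketch below shows the general shape of this computation in plain Python: each block applies self-attention over the current frame's tokens and then cross-attention to the memory tokens. It assumes single-head attention with identity query/key/value projections and omits the MLP and normalization layers that real transformer blocks use, so the names `attend` and `memory_attention` and all of the simplifications are illustrative only.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries: List[Vector], keys_values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with a residual connection.

    Assumes identity query/key/value projections (real blocks learn them).
    """
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys_values]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        attended = [sum(w * v[j] for w, v in zip(weights, keys_values)) for j in range(d)]
        out.append([qj + aj for qj, aj in zip(q, attended)])  # residual add
    return out


def memory_attention(frame_tokens: List[Vector], memory_tokens: List[Vector],
                     num_blocks: int = 2) -> List[Vector]:
    """Toy stack of blocks: self-attention, then cross-attention to memory."""
    x = frame_tokens
    for _ in range(num_blocks):
        x = attend(x, x)              # current-frame tokens attend to each other
        x = attend(x, memory_tokens)  # then to the stored memories
    return x
```

The cross-attention step is what lets information from earlier frames flow into the current frame's features; the residual add keeps the frame's own features from being overwritten.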

SAM 2’s architecture enables real-time object tracking and segmentation in videos by maintaining temporal consistency across frames. It uses image and prompt embeddings, memory attention, and a mask decoder to generate object masks.
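Putting the pieces together, the streaming loop can be sketched as below. Every component here is a hypothetical toy stand-in (1-D "frames" of intensity values, a threshold-based `decode_mask`, a mean-based memory, and averaging in place of memory attention), chosen only to show the order of operations per frame: encode the frame, condition on memory, decode a mask, then encode and store a new memory.

```python
from collections import deque
from statistics import mean
from typing import Deque, List

Frame = List[float]
Mask = List[bool]


def encode_image(frame: Frame) -> Frame:
    # Toy "image encoder": raw intensities act as per-pixel features.
    return frame


def decode_mask(features: Frame, target: float, tol: float) -> Mask:
    # Toy "mask decoder": pixels whose feature is close to the target appearance.
    return [abs(f - target) <= tol for f in features]


def encode_memory(features: Frame, mask: Mask) -> float:
    # Toy "memory encoder": summarize the predicted object as its mean feature.
    return mean(f for f, m in zip(features, mask) if m)


def track(frames: List[Frame], prompt_idx: int,
          num_memories: int = 3, tol: float = 1.0) -> List[Mask]:
    bank: Deque[float] = deque(maxlen=num_memories)  # FIFO memory bank
    masks: List[Mask] = []
    for t, frame in enumerate(frames):  # frames arrive one at a time (streaming)
        features = encode_image(frame)
        # A point prompt on the first frame; afterwards, condition on memory.
        target = features[prompt_idx] if t == 0 else mean(bank)
        mask = decode_mask(features, target, tol)
        masks.append(mask)
        if any(mask):
            bank.append(encode_memory(features, mask))
    return masks
```

Because each frame is processed once and only a bounded memory is kept, the per-frame cost does not grow with video length, which is the property that makes streaming, real-time use possible.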

Technical Training and Dataset

SAM 2's Hiera image encoder is initialized from MAE pre-trained weights, and the model is first pre-trained on static images from SA-1B. During this stage, masks that cover more than 90% of the image are filtered out, and training is restricted to 64 randomly sampled masks per image. The model is then trained on a full data mix that includes SA-V + Internal, SA-1B, and open-source video datasets such as DAVIS, MOSE, and YouTubeVOS. This training approach supports effective segmentation and tracking across video frames, as well as interactive refinements.
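The two mask-selection rules described for image pre-training can be sketched as follows, assuming each mask is represented only by its pixel area. The function `select_training_masks` and its parameters are hypothetical; only the 90% area threshold and the 64-mask limit come from the description above.

```python
import random
from typing import List, Sequence


def select_training_masks(mask_areas: Sequence[int], image_area: int,
                          max_area_frac: float = 0.9, num_samples: int = 64,
                          seed: int = 0) -> List[int]:
    """Return indices of the masks kept for one training image."""
    # Rule 1: drop masks covering more than 90% of the image.
    keep = [i for i, area in enumerate(mask_areas) if area / image_area <= max_area_frac]
    if len(keep) <= num_samples:
        return keep
    # Rule 2: randomly sample at most 64 of the remaining masks.
    rng = random.Random(seed)
    return sorted(rng.sample(keep, num_samples))
```

Capping the number of masks per image keeps the per-image training cost bounded even when an image has hundreds of annotated masks.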

The SA-V dataset contains more than 600,000 masklets (spatio-temporal object masks) drawn from about 51,000 videos collected in 47 countries. This diversity helps the dataset reflect realistic settings and supports robust model performance.

Limitations of SAM 2

● Segmenting Across Shot Changes: SAM 2 may struggle to maintain object segmentation across changes in video shots.

● Crowded Scenes: The model can lose track of or confuse objects in densely populated scenes.

● Long Occlusions: Prolonged occlusions can lead to errors or loss of object tracking.

● Fine Details: Challenges arise with objects that have very thin or fine details, especially if they move quickly.

● Inter-Object Communication: SAM 2 processes multiple objects separately without shared object-level contextual information.

● Quality Verification: The current reliance on human annotators for verifying masklet quality and correcting errors could be improved with automation.

Despite these limitations, SAM 2 has the potential to transform fields such as AR, VR, robotics, autonomous vehicles, and video editing, along with other domains that need accurate temporal localization rather than image-level segmentation alone. Its video object segmentation outputs could also serve as inputs to modern video generation models, enabling precise video editing. Extending the model with new types of input prompts could further foster creative interaction in real-time or live video.

SAM 2 delivers state-of-the-art accuracy with high efficiency across many video and image segmentation tasks. Its real-time interactivity and robust performance make it a strong foundation for new applications across many sectors. Because SAM 2 is released under an Apache 2.0 license, the wider AI community is free to build on it, which should accelerate innovation in computer vision systems. The code is available in Meta's official GitHub repository.