29.07.2025

How Much Memory Does Your LLM Really Need? A Practical Guide to Inference VRAM Consumption

How much VRAM do LLMs really need? A practical guide to inference memory sizing—model weights, KV cache, activations—with formulas and a quick estimator. Compare FP16/INT8/INT4 footprints to right‑size GPUs, control costs, and optimize deployment.

The Fundamental Relationship Between Large Language Models and GPU Memory

While Large Language Models (LLMs) have revolutionized the field of AI, this revolution comes with a significant computational cost. Central to this cost is the massive memory required to run the models and the hardware needed to support this demand: Graphics Processing Units (GPUs). Understanding the relationship between the number of parameters in an LLM and the GPU memory (VRAM) it requires is critical for developers, researchers, and institutions seeking to effectively deploy, train, and optimize this technology. This article analyzes three core components of GPU memory usage and equips you with practical formulas to estimate your model’s VRAM requirements accurately.

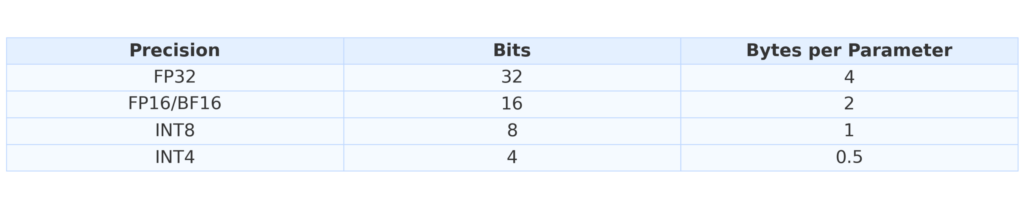

Precision Matters: Bytes per Parameter

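The numerical precision used to store model weights largely determines the memory footprint:

- FP32 (32-bit): 4 bytes per parameter

- FP16/BF16 (16-bit): 2 bytes per parameter

- INT8 (8-bit): 1 byte per parameter

- INT4 (4-bit): 0.5 bytes per parameter

FP16/BF16 is the standard for both inference and training. However, quantization (switching to INT8 or INT4) can dramatically reduce VRAM usage, often with minimal impact on model quality.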

Memory Component 1: Model Weights

During inference, GPU VRAM is primarily consumed by three main components: Model weights, the KV cache and activations.

Model weights are the static and typically largest memory component, holding the model's billions of parameters. Their size is directly proportional to the number of parameters and the numerical precision used to store them.

Calculating Memory for Model Weights

The basic formula for calculating the memory occupied by model weights is quite simple:

Model Weights(GB) = Number of Parameters(Billion) × Bytes per Parameter

Because one billion parameters at one byte each occupy roughly 1 GB, multiplying the parameter count in billions by the bytes per parameter gives the result directly in gigabytes.

For example, for a model with 7 billion (7B) parameters, the memory requirements at different precision levels would be as follows:

- FP16 (16-bit, 2 bytes per parameter): 7×2=14 GB

- INT8 (8-bit, 1 byte per parameter): 7×1=7 GB

- INT4 (4-bit, 0.5 bytes per parameter): 7×0.5=3.5 GB
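The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not a sizing tool: the names `BYTES_PER_PARAM` and `weight_memory_gb` are hypothetical, and 1 GB is taken as 10^9 bytes.

```python
# Bytes per parameter for the precisions discussed in this article.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate weight memory in GB: parameters (billions) x bytes per parameter."""
    return params_billion * BYTES_PER_PARAM[precision]


# Reproduce the 7B example for the three precisions listed above.
for precision in ("fp16", "int8", "int4"):
    print(f"{precision}: {weight_memory_gb(7, precision)} GB")
```

For the 7B example this reproduces the 14 GB, 7 GB, and 3.5 GB figures listed above.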

Memory Component 2: KV Cache

KV (Key-Value) cache is a dynamic memory area used to improve performance during autoregressive text generation. The attention mechanism in the Transformer architecture stores the key and value vectors of previously processed tokens in this cache. This eliminates the need to recalculate these values for past tokens each time a new token is generated.

The KV cache is a hidden but significant source of memory usage during transformer inference, especially in models with long context lengths. Unlike model weights, which remain constant, the KV cache grows with several factors: the batch size b (number of sequences processed simultaneously), the context length s (number of tokens held in the cache), the number of transformer layers l, the dimension of each attention head h, and the number of attention heads nh. For models that use grouped-query attention, nh is the number of key-value heads, which is smaller than the number of query heads. The approximate memory requirement for the KV cache can be calculated using the formula:

KV Memory ≈ b × s × l × h × nh × 2 × Bytes per Parameter

where the factor of 2 accounts for both the key and value tensors stored for each token. This footprint scales linearly with both context length and batch size. For instance, in the LLaMA-2 7B model at FP16, the KV cache consumes approximately 0.5 MB per token, so a 28,000-token context demands around 14 GB, as much as the model's FP16 weights. With LLaMA-3.1 70B, the KV cache for a single sequence can grow to roughly 40 GB at the full 128K-token context. That is still well below the 140 GB of FP16 weights, but it exceeds the roughly 35 GB the weights occupy at INT4, and it multiplies with batch size. This makes careful management of context length and batching crucial in resource-constrained environments.
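As a rough check of the per-token figure, the KV cache formula can be written as a small Python function. This is a sketch under stated assumptions: the function name `kv_cache_bytes` is hypothetical, and the LLaMA-2 7B shape used here (32 layers, 32 attention heads, head dimension 128, FP16 cache) is assumed from the published model configuration rather than taken from this article.

```python
def kv_cache_bytes(batch_size: int, context_len: int, num_layers: int,
                   head_dim: int, num_kv_heads: int,
                   bytes_per_value: float = 2.0) -> float:
    """KV cache size in bytes: b x s x l x h x nh x 2 x bytes per value."""
    return (batch_size * context_len * num_layers * head_dim
            * num_kv_heads * 2 * bytes_per_value)


# Assumed LLaMA-2 7B shape: 32 layers, 32 heads, head dimension 128, FP16 cache.
per_token = kv_cache_bytes(1, 1, num_layers=32, head_dim=128, num_kv_heads=32)
print(f"per token: {per_token / 2**20:.2f} MiB")

full_context = kv_cache_bytes(1, 28_000, num_layers=32, head_dim=128, num_kv_heads=32)
print(f"28,000 tokens: {full_context / 1e9:.1f} GB")
```

Under these assumptions the cache costs 0.5 MiB per token and a little under 15 GB for a 28,000-token context, in line with the approximate figures quoted above. Doubling `batch_size` doubles the result, which is why batching needs to be budgeted alongside context length.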

Memory Component 3: Activations & Overhead

Activations are the intermediate outputs passed between the layers of the model, and temporary buffers hold the working data for operations such as matrix multiplications. During inference these components typically account for a smaller portion of total memory usage and are often grouped together as general “overhead.”

This overhead also includes memory reserved by the CUDA runtime and libraries, as well as memory lost to fragmentation. A practical way to account for all of it is to add 10% to 20% to the calculated memory requirement.

Total Inference Memory: Combined Formula

Combining these components, and taking the upper end of the overhead range (20%), gives a more comprehensive inference estimate:

Total Memory = (Model Weights + KV Memory) × 1.2
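The combined formula can be sketched as follows. The function name `total_inference_memory_gb` and the `overhead_fraction` parameter are hypothetical, and the inputs reuse this article's 7B example (14 GB of FP16 weights and about 14 GB of KV cache) purely for illustration.

```python
def total_inference_memory_gb(weights_gb: float, kv_cache_gb: float,
                              overhead_fraction: float = 0.2) -> float:
    """Total estimate: (weights + KV cache) scaled up by an overhead fraction."""
    if overhead_fraction < 0:
        raise ValueError("overhead_fraction must be non-negative")
    return (weights_gb + kv_cache_gb) * (1.0 + overhead_fraction)


# 7B model at FP16 with a KV cache of about 14 GB, at both ends of the
# 10% to 20% overhead range.
low = total_inference_memory_gb(14.0, 14.0, overhead_fraction=0.1)
high = total_inference_memory_gb(14.0, 14.0, overhead_fraction=0.2)
print(f"{low:.1f} to {high:.1f} GB")
```

Keeping the overhead as a parameter makes it easy to report a range instead of a single number, which is usually a more honest answer when choosing a GPU.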

Bonus: Memory Estimation Shortcut

For a quick estimation of total GPU memory requirements during inference:

M = (P × 4 bytes) / (32 / Q) × 1.2

where M is the total GPU memory in gigabytes, P is the number of model parameters in billions, 4 bytes is the size of one parameter at 32-bit precision, Q is the precision in bits used to load the model (e.g., 16 for FP16), and the factor 1.2 accounts for typical system overheads such as activations, buffers, and memory fragmentation. Dividing by 32/Q scales the 32-bit footprint down to the chosen precision. The formula approximates weights plus overhead in one step; it does not include the KV cache. For example, applying the formula to a 7-billion-parameter model (7B) at FP16 precision gives:

M = (7 × 4) / (32 / 16) × 1.2 = 28 / 2 × 1.2 = 16.8 GB.

This quick estimate covers model weights and overhead only, so it is most useful for rapid feasibility checks on available hardware. For long contexts or large batches, add the KV cache estimate on top.
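A minimal sketch of the shortcut in Python, with the division by 32/Q written out so that lower precision yields a smaller result. The function name `quick_estimate_gb` is hypothetical, and the 1.2 overhead factor is the heuristic used in this article, not a measured value.

```python
def quick_estimate_gb(params_billion: float, precision_bits: int,
                      overhead_factor: float = 1.2) -> float:
    """Shortcut estimate: (P x 4 bytes) / (32 / Q) x overhead factor."""
    if precision_bits <= 0:
        raise ValueError("precision_bits must be positive")
    fp32_footprint_gb = params_billion * 4       # 4 bytes per parameter at 32-bit
    scale_down = 32 / precision_bits             # e.g. 2 for FP16, 8 for INT4
    return fp32_footprint_gb / scale_down * overhead_factor


# 7B model at 16-bit, 8-bit, and 4-bit precision.
for bits in (16, 8, 4):
    print(f"{bits}-bit: {quick_estimate_gb(7, bits):.1f} GB")
```

For the 7B model this gives about 16.8 GB at FP16, 8.4 GB at INT8, and 4.2 GB at INT4, each equal to the corresponding weight footprint plus 20%.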

Conclusion

Understanding VRAM consumption isn’t just theoretical—it’s essential for deploying scalable and efficient LLM systems. With these formulas and heuristics, you’ll be better equipped to manage memory constraints, optimize inference pipelines, and choose the right deployment strategy for your model.
