Anthropic is putting its “autonomous coding” vision into practice with Claude Sonnet 4.5. According to the company, the model can stay on task for more than 30 hours in a single run without losing focus. Over that span it does more than write code: it provisions the database, completes configuration, and takes an application from an empty project to a working state. Early enterprise pilots suggest a clear step up from the previous generation.

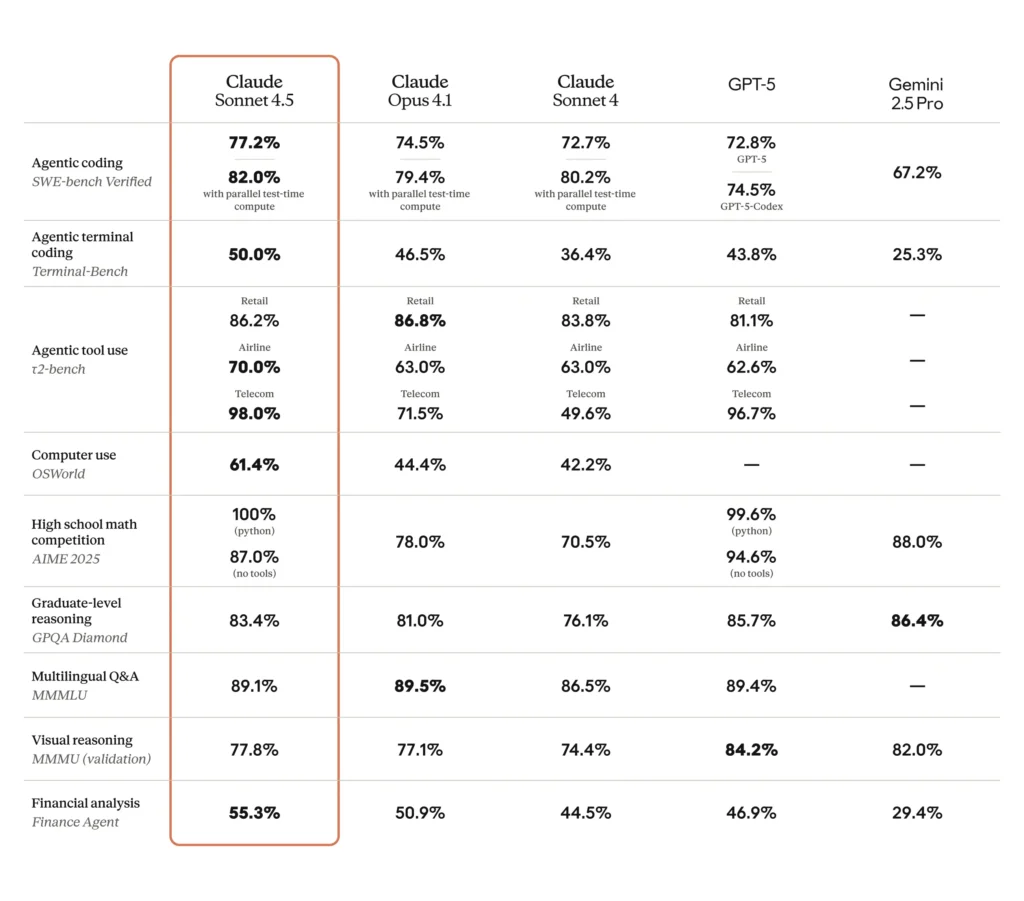

Performance: Claiming the top spot on SWE-bench and OSWorld

On real-world benchmarks, the picture is equally clear. Sonnet 4.5 leads SWE-bench Verified, which measures bug fixing against real repositories and issues, with a score of 77.2%. On OSWorld, which evaluates a model’s computer-use capabilities, it reaches 61.4%. Sonnet 4 topped the same OSWorld test at around 42% just four months earlier, so the jump points to more reliable multi-step execution on an actual computer rather than a gain confined to synthetic tasks.
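To put the OSWorld jump in numbers, here is a minimal sketch of the arithmetic. It uses the two scores quoted above; the Sonnet 4 figure is only approximate ("around 42%"), so the results should be read as rough estimates, and the function name is purely illustrative.

```python
# OSWorld scores as quoted in the article (percent of tasks completed).
SONNET_4_OSWORLD = 42.0    # approximate: the article says "around 42%"
SONNET_4_5_OSWORLD = 61.4


def score_gains(old: float, new: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new - old
    relative = absolute / old * 100
    return absolute, relative


abs_gain, rel_gain = score_gains(SONNET_4_OSWORLD, SONNET_4_5_OSWORLD)
print(f"{abs_gain:.1f} percentage points, {rel_gain:.0f}% relative")
# prints "19.4 percentage points, 46% relative"
```

The distinction matters when comparing models: a gain of about 19 percentage points is a relative improvement of roughly 46% over the earlier score.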

For developers: Agent SDK and Claude Code updates

Two developer-facing pieces stand out. The first is the Claude Agent SDK: Anthropic is opening up the agent infrastructure behind Sonnet 4.5, giving teams a framework for memory management on long tasks, permissioning and autonomy control, and multi-agent coordination. The second is Imagine with Claude, a time-limited research preview designed to showcase software created in real time without boilerplate. Together, these push agentic scenarios beyond “demo-ware” and closer to production.

No surprises on pricing: Sonnet 4.5 ships at the same rates as Sonnet 4, $3 per 1M input tokens and $15 per 1M output tokens. That matters for teams seeking stronger capabilities without blowing up cost forecasts. The model is available today via the Claude API and the chat interface.
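For budgeting, the quoted rates translate directly into a per-workload estimate. The sketch below assumes only the two list prices given above; the token counts in the example are hypothetical, and real invoices may differ (for instance, if discounts or other pricing tiers apply).

```python
# List prices quoted in the article, in USD per 1M tokens.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a workload from its token counts."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MTOK
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MTOK
    return input_cost + output_cost


# Hypothetical long-running agent job: 20M input tokens, 2M output tokens.
example_cost = estimate_cost_usd(20_000_000, 2_000_000)
print(f"${example_cost:.2f}")  # prints "$90.00"
```

Because output tokens cost five times as much as input tokens, long runs that generate a lot of code are the ones to watch in a forecast.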

Safety & alignment: ASL-3 protections and injection defenses

Safety and alignment sit at the center of the launch. Anthropic positions Sonnet 4.5 as its most aligned frontier model to date. The company reports reductions in sycophancy, deception, and power-seeking behaviors, along with stronger prompt-injection defenses and ASL-3 protections. For compliance, audit, and security stakeholders, these improvements can lower the barriers to adopting agentic workflows.

Practical benefits for enterprises

What does this look like in practice? For a mid-size team modernizing legacy services, Sonnet 4.5 can proceed in a single long run from repo scanning and dependency cleanup to turning tests green and updating CI. In an analyst scenario, the model can download files in the browser, transform spreadsheets, and produce a concise summary report in the same session. In short, this is about effective computer use, not just text generation.

Anthropic’s Agent SDK fills in the governance and operability gaps for production: checkpoint/rollback, permission policies, and multi-agent orchestration give engineering leaders a workable path from PoC to rollout.

- Code modernization: Multi-step refactors (e.g., decomposing a monolith) can run end-to-end. Checkpoints and permissions systematize control points and safe rollbacks.

- Data-to-report loops: Browser-based “download-clean-report” cycles for finance/ops can be executed in one continuous session.

- Agent portfolio: With sub-agents (e.g., backend API setup in parallel with frontend scaffolding), the SDK enables concurrent flows under enterprise permission schemes.
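The control points in the list above (checkpoints, permission policies, concurrent sub-agents) can be illustrated with a small, self-contained sketch. This is not the Claude Agent SDK API: `Workspace`, `Policy`, and `run_sub_agent` are hypothetical names invented for illustration, the "agents" are plain coroutines applying pre-planned file writes, and the standard-library `asyncio` module stands in for real orchestration.

```python
import asyncio
import copy
from dataclasses import dataclass, field


@dataclass
class Workspace:
    """Toy stand-in for a repository: a mapping of file path to contents."""
    files: dict = field(default_factory=dict)
    _checkpoints: list = field(default_factory=list)

    def checkpoint(self) -> int:
        """Snapshot the current files and return the checkpoint id."""
        self._checkpoints.append(copy.deepcopy(self.files))
        return len(self._checkpoints) - 1

    def rollback(self, checkpoint_id: int) -> None:
        """Restore the files to the given snapshot."""
        self.files = copy.deepcopy(self._checkpoints[checkpoint_id])


@dataclass(frozen=True)
class Policy:
    """Hypothetical permission policy: path prefixes an agent may write to."""
    allowed_prefixes: tuple

    def allows(self, path: str) -> bool:
        return path.startswith(self.allowed_prefixes)


async def run_sub_agent(name, policy, workspace, planned_writes):
    """Apply each planned write if the policy allows it; otherwise raise."""
    for path, content in planned_writes:
        if not policy.allows(path):
            raise PermissionError(f"{name} may not write {path}")
        await asyncio.sleep(0)  # yield control, standing in for model/tool latency
        workspace.files[path] = content
    return name


async def main():
    ws = Workspace()
    cp = ws.checkpoint()  # control point before any agent touches the workspace
    backend = run_sub_agent(
        "backend", Policy(("api/",)), ws, [("api/routes.py", "# routes")]
    )
    frontend = run_sub_agent(
        "frontend", Policy(("web/",)), ws,
        [("web/index.html", "<!-- ui -->"), ("api/secrets.py", "x")],
    )
    # Run both sub-agents concurrently and collect failures instead of raising.
    results = await asyncio.gather(backend, frontend, return_exceptions=True)
    if any(isinstance(r, Exception) for r in results):
        ws.rollback(cp)  # one violation undoes the whole batch of changes
    return ws, results


workspace, results = asyncio.run(main())
print(sorted(workspace.files), results)
```

In this run the frontend agent attempts a write outside its allowed prefix, so the whole batch is rolled back to the checkpoint. Whether a production system should roll back everything or only the offending agent's changes is a design choice this sketch does not settle.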

Conclusion

Claude Sonnet 4.5 is one of the first models to back up the “autonomous coding” story with concrete examples. Runs of 30+ hours, 61.4% on OSWorld, and a leading 77.2% on SWE-bench Verified indicate a system that can actually use a computer to get work done. At the same time, the Agent SDK, with its permissions, checkpoints, and multi-agent coordination, offers the governance layer enterprises need to move from PoC to production.

With the model landscape evolving fast, Anthropic’s approach of more capability and tighter safety at the same price keeps the competitive race hot. For engineering leaders, the imperative is clear: start with a narrow, end-to-end pilot, measure whether the agent truly ships the task, then scale with permissions, rollback, and auditability across larger codebases.
