What is RAG?

The article explains what RAG is and how it works.

December 5, 2023

Gizem Argunşah

This article is about RAG, an approach that enables large language models (LLMs) to access and use current and accurate information from an external knowledge source to generate answers. It explains what RAG is and how it works, and it discusses the advantages of RAG over the traditional fine-tuning approach of LLMs and the use cases of RAG for business and entertainment purposes.

What is RAG and How Does It Enhance Large Language Models?

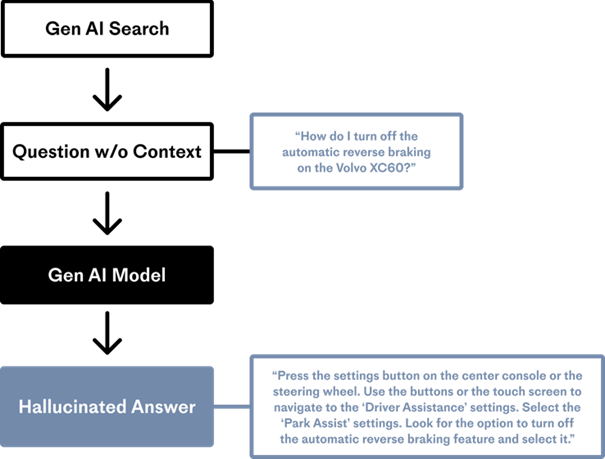

Large language models (LLMs) are among the most powerful and versatile artificial intelligence systems in natural language processing (NLP). LLMs learn from a huge amount of text data and can perform various tasks such as question answering, text summarization, text generation, and more. However, LLMs also have limitations that can affect their quality, reliability, and transparency. One of these limitations is the knowledge gap: LLMs cannot access new or updated information beyond their training data. Another limitation is the lack of explainability: LLMs cannot show users the source of their answers or how accurate those answers are.

In this article, we will introduce RAG, which stands for retrieval-augmented generation, as an approach that enables LLMs to work with your own data along with their training data. We will explain what RAG is, how it works, and how it can be used with Azure Machine Learning. We will also discuss the advantages of RAG over the traditional fine-tuning approach of LLMs and the use cases of RAG for business and entertainment purposes.

What is RAG?

RAG is an artificial intelligence framework that enables LLMs to access current and accurate information from an external knowledge source (such as a database or a web page) and use this information to generate answers. RAG consists of two main components: a retrieval system and a generation system. The retrieval system queries the external knowledge source to find the most relevant facts for the user’s question. The generation system uses the retrieved facts to generate an answer. In this way, RAG enables LLMs to generate both knowledge-based and creative answers.

The difference between an LLM with and without RAG is like the difference between an open-book and a closed-book exam. In a RAG system, when you ask the model a question, it answers by browsing through the content in a book rather than trying to recall facts from memory.

How does RAG work?

RAG works by combining the strengths of two types of models: dense retrieval models and generative models. Dense retrieval models encode queries and documents as vectors, which lets them efficiently search a large collection of documents and find the most relevant ones for a given query. Generative models are LLMs that produce fluent and coherent text for a given context. RAG uses a dense retrieval model to find the most relevant facts from an external knowledge source and a generative model to turn these facts into an answer.

The workflow of RAG is as follows:

- The user inputs a question to the RAG system.

- The RAG system encodes the question into a vector representation using the dense retrieval model.

- The RAG system compares the question vector with the document vectors in the external knowledge source and retrieves the top-k most similar documents.

- The RAG system concatenates the question and the retrieved documents into a single context and feeds it to the generative model.

- The RAG system generates an answer using the generative model and outputs it to the user.
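The workflow above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation. It rests on several assumptions: `embed` is a toy word-count vector standing in for a real dense retrieval model, `generate` is a placeholder where a call to a generative model would go, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a dense encoder: a word-count vector (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=2):
    """Encode the question, score every document, return the top-k."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, retrieved):
    """Concatenate the retrieved documents and the question into one context."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def generate(prompt):
    """Placeholder for the generative model call (hypothetical)."""
    return f"[model output for a prompt of {len(prompt)} characters]"


def answer(question, documents, k=2):
    """Full pipeline: retrieve, build the context, generate."""
    retrieved = retrieve(question, documents, k)
    return generate(build_prompt(question, retrieved)), retrieved


documents = [
    "The office is closed on public holidays.",
    "Refunds are processed within 14 days of purchase.",
    "Our support team answers email within one business day.",
]
reply, sources = answer("How many days does a refund take?", documents, k=1)
```

In a real system, `embed` would be replaced by a trained encoder, the document vectors would be precomputed and stored in an index instead of being recomputed for every question, and `generate` would call the LLM.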

What are the advantages of RAG?

RAG has several advantages over the traditional fine-tuning approach of LLMs, which involves further training an LLM on a specific task or domain using a limited amount of data. Some of these advantages are:

- RAG enables LLMs to work with your own data along with their training data. This allows LLMs to generate more specific, customized, and relevant answers. For example, you can give your own company’s data to an LLM with RAG and get answers about your company.

- RAG enables LLMs to access current and accurate information. This allows LLMs to generate more reliable and consistent answers. For example, you can give updated news or statistics to an LLM with RAG and have the LLM use this information in its answers.

- RAG enables LLMs to show the sources of their answers. This allows LLMs to generate more transparent and accountable answers. For example, when you ask a question to an LLM with RAG, the system can tell you which retrieved documents the answer came from, so you can judge how reliable those sources are.
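To illustrate the last point, here is a small sketch of how a RAG system might attach its sources to an answer. The `Passage` type, its field names, and the output format are assumptions made for this example and are not taken from any particular product. Note that the score shown is the retriever's similarity to the question, which indicates relevance rather than how trustworthy the source is.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str   # hypothetical identifier, e.g. a file name or URL
    text: str     # the retrieved passage itself
    score: float  # similarity to the question, as reported by the retriever


def format_answer_with_sources(answer_text, passages):
    """Append a numbered source list, most similar passage first."""
    lines = [answer_text, "", "Sources:"]
    ranked = sorted(passages, key=lambda p: p.score, reverse=True)
    for i, passage in enumerate(ranked, start=1):
        lines.append(f"[{i}] {passage.source} (similarity {passage.score:.2f})")
    return "\n".join(lines)


passages = [
    Passage("handbook.md", "The office is closed on public holidays.", 0.42),
    Passage("faq.md", "Refunds are processed within 14 days of purchase.", 0.91),
]
print(format_answer_with_sources("Refunds take up to 14 days.", passages))
```

Because the source list is built from the passages that were actually retrieved, the reader can open each one and check the answer against it.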

Conclusion

RAG is an approach that enables LLMs to access current and accurate information and use this information to generate answers. RAG improves the quality, reliability, and transparency of LLMs. RAG is also available in Skymod AI and allows you to use your own data with LLMs. RAG enables LLMs to be used more effectively for business and entertainment purposes. If you are interested in learning more about RAG, you can read our paper. If you have any questions or feedback, feel free to contact us. We hope you enjoy using RAG and LLMs. Thank you for reading.