27.06.2025

vLLM vs LLM: The New Era of LLM Serving

Meet vLLM — the next step in efficient LLM serving! Powered by PagedAttention, it delivers faster inference, reduced latency, and optimized GPU memory. Ideal for RAG systems, chatbots, and high-throughput content generation.

Having entered the mainstream over the past couple of years, Large Language Models (LLMs) have brought fast access to knowledge and paved the way for major technological developments. To the average user, LLMs may seem efficient; however, they consume large amounts of energy and require a large number of GPUs. On top of the hardware cost, requests suffer from latency because of the way most current serving systems manage memory. This has led to the search for an architecture that handles memory more efficiently. Enter vLLM, or Virtual Large Language Model, which lets LLMs be served more efficiently.

What is vLLM?

vLLM is an open-source library for fast LLM inference and serving. It uses PagedAttention, which manages GPU memory more effectively, specifically the memory that holds attention keys and values. It was first introduced by researchers from UC Berkeley, Stanford University and UC San Diego in a paper called “Efficient Memory Management for Large Language Model Serving with PagedAttention”. The paper analyzes the efficiency and scalability problems of current LLM serving systems and proposes a different way to use GPU memory.

How is vLLM different from traditional LLMs?

Before diving into vLLM, it is key to understand how LLMs and their memory systems work today. At the core of an LLM lies a Transformer model, which uses an autoregressive decoder to generate one token at a time, based on the input (the prompt) and the sequence of output tokens it has generated so far. For each request, token generation continues until the model returns a termination token. The paper asserts that this sequential process makes the workload memory-bound, which underutilizes the computational capabilities of GPUs and limits serving throughput.

More than half of GPU memory is taken by the model weights, which are static, and a smaller portion is taken up by the dynamic states of the requests. In Transformers these dynamic states are the key and value tensors of the attention mechanism, commonly referred to as the KV cache, which represents the context from earlier tokens. The KV cache grows and shrinks over time with the number of tokens generated. When it is not managed efficiently, it limits the batch size and consequently lowers throughput. Current systems are generally hindered by two major inefficiencies: the first is internal and external memory fragmentation, and the second is the inability to exploit opportunities for memory sharing.

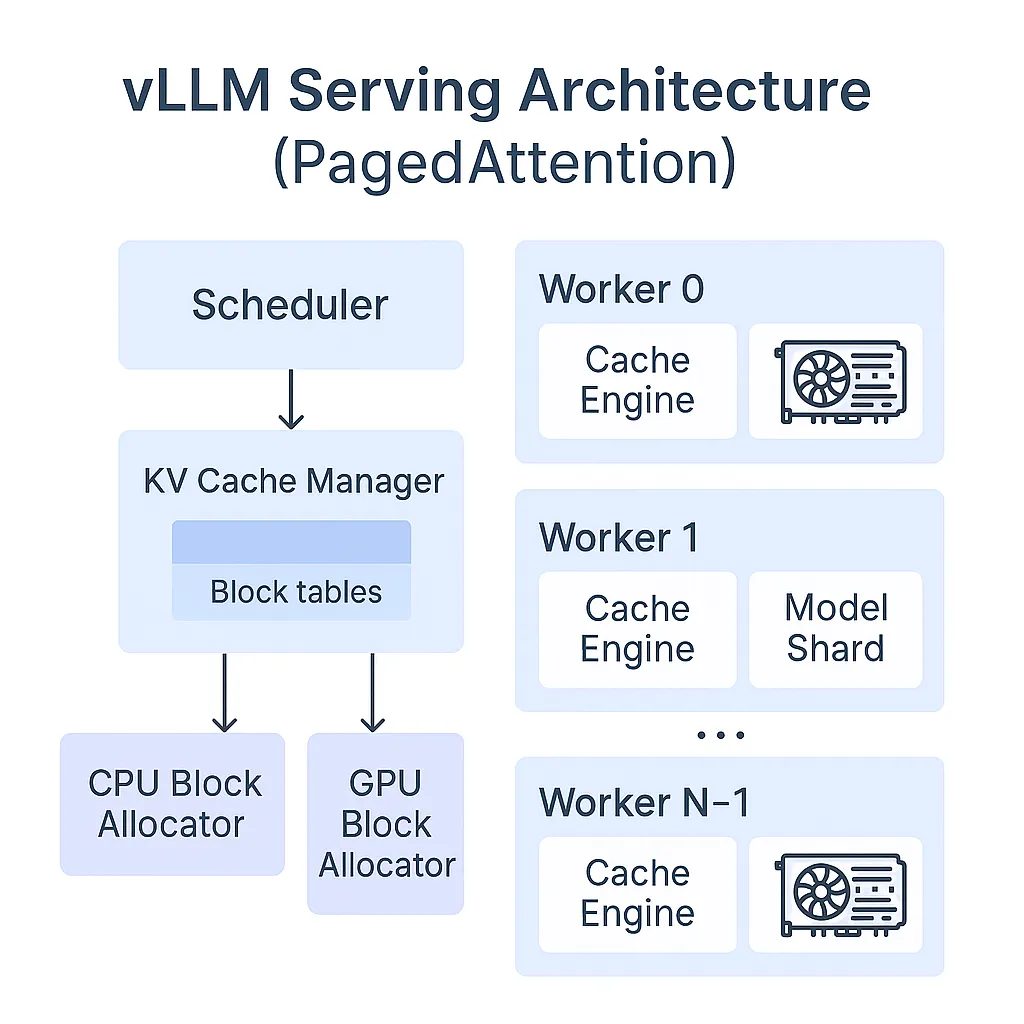
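To get a feel for why the KV cache limits batch size, the arithmetic can be sketched in a few lines. The model shape used here (40 layers, hidden size 5120, 2 bytes per FP16 value) is an assumption chosen to resemble a 13B-parameter model, and the 2048-token limit and the 300-token request are illustrative figures, not measurements.

```python
# Back-of-the-envelope KV cache sizing.
# Assumptions (not from the article): 40 layers, hidden size 5120, FP16 values.


def kv_bytes_per_token(num_layers: int, hidden_size: int, bytes_per_value: int = 2) -> int:
    """Bytes of KV cache one token occupies: one key and one value vector per layer."""
    return 2 * num_layers * hidden_size * bytes_per_value


def preallocation_waste(max_len: int, actual_len: int) -> float:
    """Fraction of a contiguous max-length reservation that is never used."""
    return 1 - actual_len / max_len


per_token = kv_bytes_per_token(num_layers=40, hidden_size=5120)
per_request = per_token * 2048  # contiguous reservation for a 2048-token sequence

print(f"{per_token / 1024:.0f} KiB per token")
print(f"{per_request / 1024**3:.2f} GiB reserved per 2048-token request")
print(f"{preallocation_waste(2048, 300):.0%} of that is wasted if only 300 tokens are used")
```

Under these assumptions a single request reserved at maximum length ties up well over a gigabyte, most of which sits idle for short outputs. That idle space is the internal fragmentation described above.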

vLLM addresses these bottlenecks by introducing a novel technique: PagedAttention. Inspired by the concept of virtual memory with paging in operating systems, PagedAttention differs from conventional attention mechanisms by enabling non-contiguous storage of key and value tensors. Instead of storing the KV cache as a single contiguous chunk, it divides it into fixed-size blocks, each holding the keys and values for a set number of tokens. When performing attention, the PagedAttention kernel efficiently locates and retrieves only the relevant KV blocks needed for computation.
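The paging idea can be illustrated with a toy block table that maps each sequence's logical blocks to physical blocks allocated on demand. This is a minimal sketch, not vLLM's implementation: `ToyBlockManager`, `ToySequence` and the block size of 4 are hypothetical names and values chosen for illustration, and no real tensors are stored.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per KV block; toy value for illustration


@dataclass
class ToyBlockManager:
    """Hypothetical allocator that hands out fixed-size physical blocks on demand."""
    num_physical_blocks: int
    free_blocks: list = field(default_factory=list)

    def __post_init__(self) -> None:
        self.free_blocks = list(range(self.num_physical_blocks))

    def allocate(self) -> int:
        if not self.free_blocks:
            raise MemoryError("no free KV blocks")
        # pop() takes from the end, so high-numbered blocks are handed out first.
        return self.free_blocks.pop()


@dataclass
class ToySequence:
    manager: ToyBlockManager
    num_tokens: int = 0
    block_table: list = field(default_factory=list)  # logical index -> physical block

    def append_token(self) -> None:
        # A new physical block is needed only when the last one is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(self.manager.allocate())
        self.num_tokens += 1

    def locate(self, token_index: int) -> tuple:
        """Return (physical block, offset inside block) for a token position."""
        if not 0 <= token_index < self.num_tokens:
            raise IndexError(token_index)
        return self.block_table[token_index // BLOCK_SIZE], token_index % BLOCK_SIZE


manager = ToyBlockManager(num_physical_blocks=8)
seq_a, seq_b = ToySequence(manager), ToySequence(manager)
for _ in range(6):  # two requests growing in lockstep, one token at a time
    seq_a.append_token()
    seq_b.append_token()

print(seq_a.block_table, seq_b.block_table)
print(seq_a.locate(5))
```

Because the two sequences grow in an interleaved fashion, each ends up with physical blocks that are not adjacent, yet every token can still be found through the block table. Unused space is limited to the unfilled tail of each sequence's last block.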

Moreover, vLLM supports popular LLMs of varying sizes, such as GPT, OPT and LLaMA. According to the evaluations in the paper, vLLM improves LLM serving throughput by 2-4× at the same level of latency compared with state-of-the-art serving systems such as FasterTransformer and Orca, with no negative effect on model accuracy.

vLLM provides a significant advantage in parallel sampling, where multiple output sequences are generated from the same prompt. In this case the computation and memory for the prompt can be shared between the output sequences, and vLLM's block-based layout makes this sharing easy to realize, which saves memory. Similarly, vLLM improves efficiency, throughput and latency in beam search, where candidate beams are processed in parallel and can share memory for their common parts.
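Prompt sharing in parallel sampling can be sketched with reference counts and copy-on-write at block granularity, which is the mechanism the PagedAttention paper describes. The `SharedBlockPool` class, the block size, and the prompt length below are hypothetical and only illustrate the bookkeeping; no actual KV data is stored or copied.

```python
BLOCK_SIZE = 4  # tokens per block; toy value for illustration


class SharedBlockPool:
    """Hypothetical pool that tracks how many sequences reference each block."""

    def __init__(self) -> None:
        self.ref_count = {}
        self._next_id = 0

    def allocate(self) -> int:
        block_id = self._next_id
        self._next_id += 1
        self.ref_count[block_id] = 1
        return block_id

    def share(self, block_id: int) -> int:
        self.ref_count[block_id] += 1
        return block_id

    def copy_on_write(self, block_id: int) -> int:
        """Return a block that is safe to write: the same one if unshared, else a fresh one."""
        if self.ref_count[block_id] == 1:
            return block_id
        self.ref_count[block_id] -= 1
        return self.allocate()


def blocks_for(num_tokens: int) -> int:
    return -(-num_tokens // BLOCK_SIZE)  # ceiling division


pool = SharedBlockPool()
prompt_len, num_samples = 10, 3

# The prompt is stored once: 3 blocks, the last one only partly filled.
prompt_blocks = [pool.allocate() for _ in range(blocks_for(prompt_len))]

# The first sample owns the prompt blocks; the others point at the same ones.
samples = [list(prompt_blocks)]
samples += [[pool.share(b) for b in prompt_blocks] for _ in range(num_samples - 1)]

# Each sample writes its first generated token into the partly filled last block,
# so a shared last block must be replaced by a private copy before writing.
for table in samples:
    table[-1] = pool.copy_on_write(table[-1])

shared_total = len(pool.ref_count)
unshared_total = num_samples * blocks_for(prompt_len)
print(samples)
print(f"{shared_total} blocks with sharing vs {unshared_total} without")
```

In this toy run the full prompt blocks stay shared by all three samples, and only the partly filled last block is duplicated when the samples start to diverge.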

Benchmark Comparison: vLLM vs Hugging Face Transformers vs TGI

The developers of vLLM compared its throughput with Hugging Face Transformers (HF) and Text Generation Inference (TGI), and report that vLLM achieves up to 24x higher throughput than HF and up to 3.5x higher throughput than TGI. These results highlight how much vLLM changes performance in LLM inference and serving. Hugging Face Transformers on its own does not yield high throughput; TGI improves on it but still falls short of vLLM, as shown in the benchmark charts on the vLLM blog.

Use cases for vLLM

The level of performance improvement achieved makes vLLM ideal for high-throughput generation environments such as:

● RAG systems

● Chatbots and virtual assistants

● Content generation

● Automated translation

Closing remarks

vLLM stands out by rethinking how memory and computation are managed during inference. Through PagedAttention, it significantly improves throughput, responsiveness and resource efficiency. It’s fair to say that vLLM is poised to play a growing role in the future of LLM deployment as the demand for scalable, efficient inference continues to rise.

References

https://docs.vllm.ai/en/latest/

https://blog.vllm.ai/2023/06/20/vllm.html

https://dl.acm.org/doi/abs/10.1145/3600006.3613165
